Here are some disk performance measurements, made with CrystalDiskMark on a Windows 10 VM running on vSphere 6.5, across four types of datastore: a local SSD array, synchronous NFS, asynchronous NFS and iSCSI.

The NFS and iSCSI datastores were on a Synology RS3412xs with 7x 2TB Samsung 860 EVO SSDs in a RAID5 volume. The vSphere host was connected to the Synology over 10GbE.

The vSphere host was a Dell 720 with a PERC H710 mini disk controller (with battery-backed cache) and 4x 2TB Micron 5100 SSDs in RAID5.

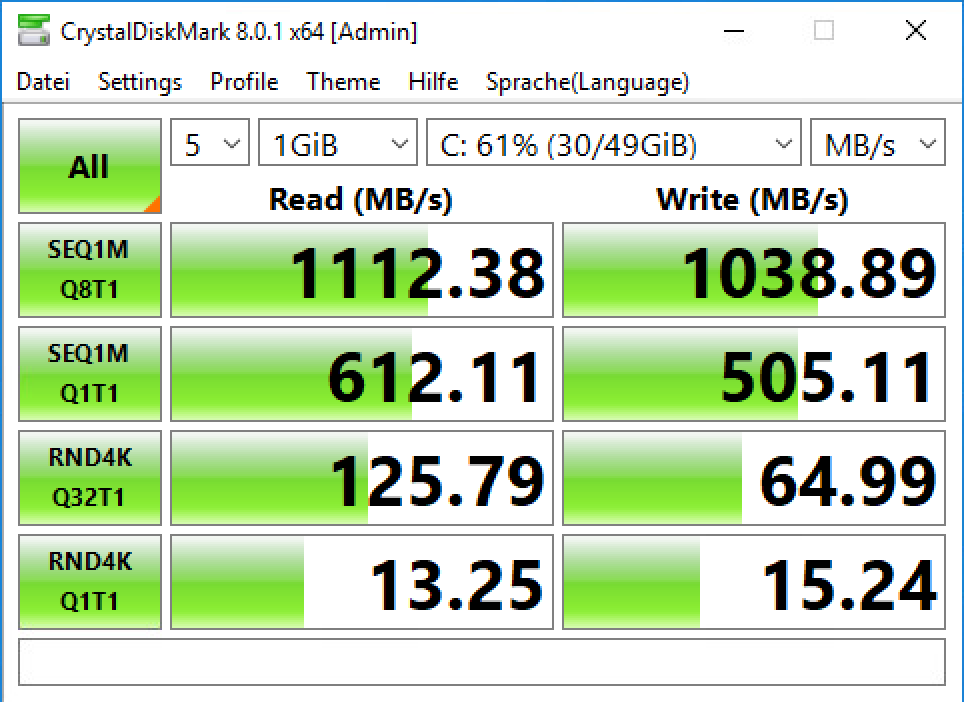

Note: Test 4, using asynchronous NFS, is not relevant for real-world VMs because a power failure is likely to corrupt VM file systems on asynchronous NFS volumes.

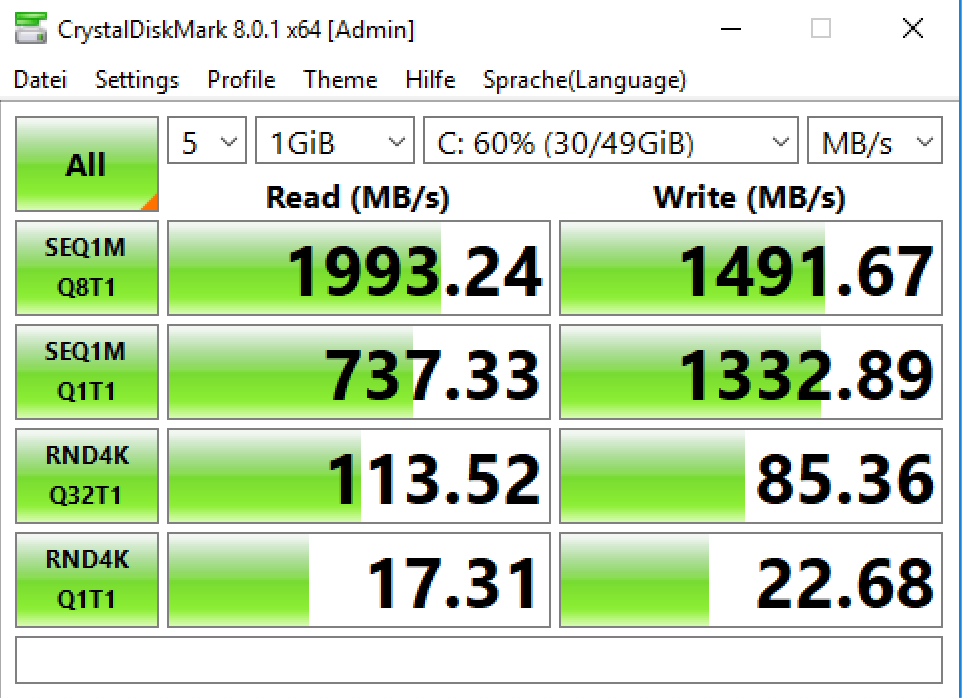

Test 1: Local SSD array datastore

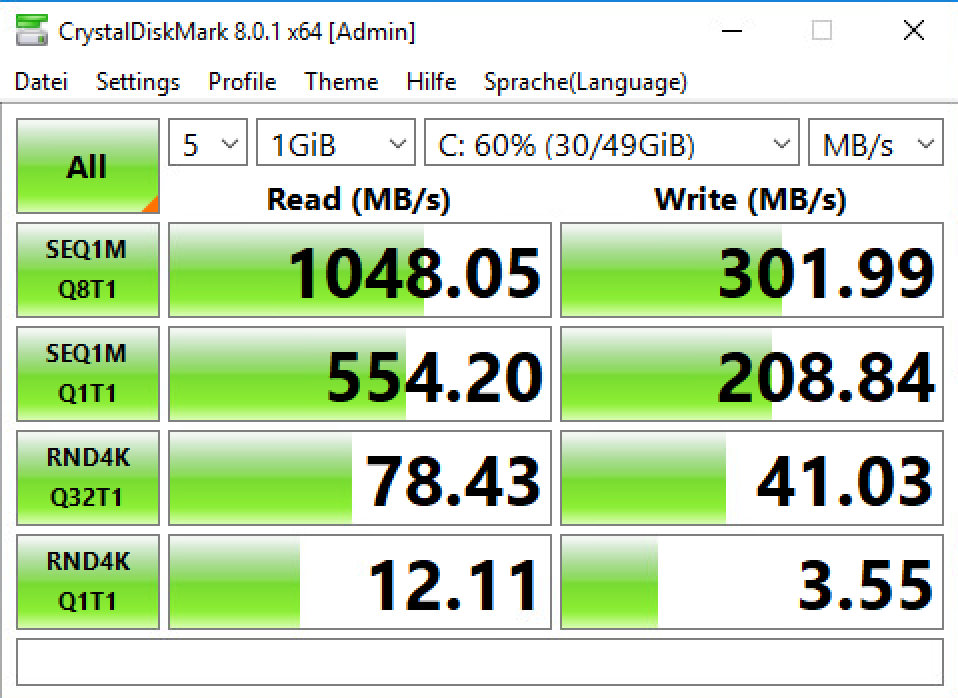

Test 2: NFS (synchronous) datastore

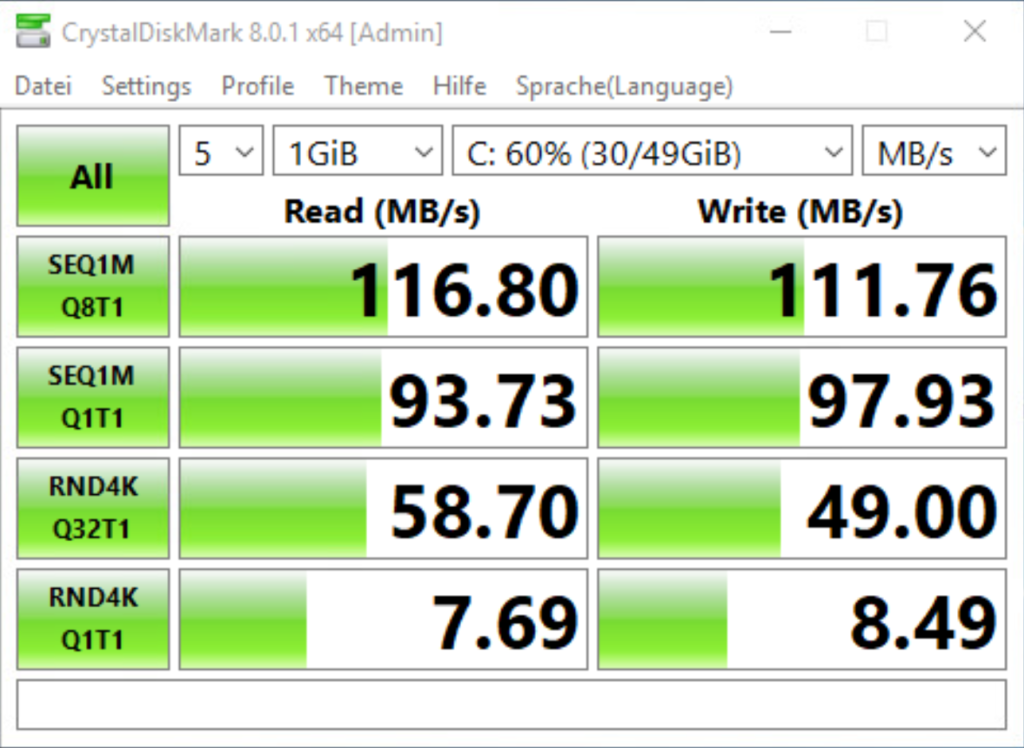

Test 3: iSCSI datastore

Test 4: NFS (asynchronous) datastore

Note: this test is included only for comparison. Async NFS is not suitable for production VMs, because a power failure can corrupt the VM’s file system: journaled writes that the guest believes are committed may not yet have been flushed to disk.
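To get a feel for why the synchronous and asynchronous results differ so much, here is a minimal local sketch. It is not an NFS test and it did not produce any of the numbers above. It only times small writes with and without an `os.fsync` after each one, which is roughly the trade-off between acknowledging a write after it reaches stable storage (sync) and acknowledging it while it is still in a cache (async). The function names, block size and write count are my own choices for illustration.

```python
import os
import tempfile
import time


def timed_writes(path, block, count, sync_each_write):
    """Write `count` copies of `block` to `path` and return the elapsed seconds.

    When `sync_each_write` is True, every write is flushed and fsync'd,
    loosely mimicking a datastore that only acknowledges committed writes.
    """
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(block)
            if sync_each_write:
                f.flush()             # push Python's buffer down to the OS
                os.fsync(f.fileno())  # ask the OS to commit to stable storage
    return time.perf_counter() - start


def compare(block_size=4096, count=200):
    """Return (buffered_seconds, synced_seconds) for the same write pattern."""
    block = b"\0" * block_size
    with tempfile.TemporaryDirectory() as tmp:
        buffered = timed_writes(os.path.join(tmp, "buffered.bin"), block, count, False)
        synced = timed_writes(os.path.join(tmp, "synced.bin"), block, count, True)
    return buffered, synced


if __name__ == "__main__":
    buffered, synced = compare()
    print(f"buffered: {buffered:.4f}s  fsync per write: {synced:.4f}s")
```

The gap you see depends heavily on the drive and on any write cache in front of it (a battery-backed controller cache, for example, can acknowledge an fsync quickly and still be safe), so treat the output as an illustration of the mechanism rather than a benchmark.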

Hey Roger,

Did you notice any load problems on the Synology when disabling “async” on the NFS share?

I got similar “ok” performance on an RS4021xs+ over NFS with async, but the system was nearly unusable when I disabled async.

With async disabled, the load average of the Synology would rise above 10 within 30 seconds and performance would drop to 15-30 MB/s on writes…

Our setup is:

13x 10TB WD Red (RAID6)

2x 1TB SSD cache (RAID1). Yeah, I know this drops our RAID level overall 🙂

And which test profile in CrystalDiskMark did you use?

The iSCSI test looks suspiciously like the network link had dropped from 10Gb to 1Gb.